Metaculus FAQ

Basics

- What is Metaculus?

- What is forecasting?

- When is forecasting valuable?

- Why should I be a forecaster?

- Who created Metaculus?

- What are Metaculus Tournaments and Question Series?

- Is Metaculus a prediction market?

- Are Metaculus questions Polls?

Metaculus Questions

- What sorts of questions are allowed, and what makes a good question?

- Who creates the questions, and who decides which get posted?

- Who can edit questions?

- How can I get my own question posted?

- How do I add coauthors to my question?

- What can I do if a question I submitted has been pending for a long time?

- What can I do if a question should be resolved but isn't?

- What is a private question?

- What are the rules and guidelines for comments and discussions?

- What do "credible source" and "before [date X]" and such phrases mean exactly?

- What types of questions are there?

- What are question groups?

- What are Conditional Pairs?

- How do I find certain questions on Metaculus?

Question Resolution

- What are the "open date", "close date" and "resolve date"?

- What timezone is used for questions?

- Who decides the resolution to a question?

- What are "Ambiguous" and "Annulled" resolutions?

- Do all questions get resolved?

- When will a question be resolved?

- Is the background material used for question resolution?

- What happens if the resolution criteria of a question is unclear or suboptimal?

- Can questions be re-resolved?

- What happens if a question gets resolved in the real world prior to the close time?

- When should a question specify retroactive closure?

- What happens if a question's resolution criteria turn out to have been fulfilled prior to the opening time?

- What happens if a resolution source is no longer available?

- What are Resolution Councils?

Predictions

- Is there a tutorial or walkthrough?

- How do I make a prediction? Can I change it later?

- How do I use the range interface?

- How is the Community Prediction calculated?

- What is the Metaculus Prediction?

- What are public figure predictions?

- What is "Reaffirming" a prediction?

Scores and Medals

Metaculus Journal

Miscellany

- What are Metaculus Pro Forecasters?

- Does Metaculus have an API?

- How do I change my username?

- I’ve registered an account. Why can’t I comment on a question?

- Understanding account suspensions

- Why can I see the Community Prediction on some questions, the Metaculus Prediction on others, and no prediction on some others?

- What is NewsMatch?

- What are Community Insights?

- Can I get my own Metaculus?

- How can I help spread the word about Metaculus?

- How can I close my account and delete my personal information on Metaculus?

Basics

What is Metaculus?

Metaculus is an online forecasting platform and aggregation engine that brings together a global reasoning community and keeps score for thousands of forecasters, delivering machine learning-optimized aggregate forecasts on topics of global importance. The Metaculus forecasting community is often inspired by altruistic causes, and Metaculus has a long history of partnering with nonprofit organizations, university researchers and companies to increase the positive impact of its forecasts.

Metaculus therefore poses questions about the occurrence of a variety of future events, on many timescales, to a community of participating forecasters — you!

The name "Metaculus" comes from the Metaculus genus in the Eriophyidae family, a genus of herbivorous mites found in many locations around the world.

What is forecasting?

Forecasting is a systematic practice of attempting to answer questions about future events. On Metaculus, we follow a few principles to elevate forecasting above simple guesswork:

First, questions are carefully specified so that everyone understands beforehand and afterward which kinds of outcomes are included in the resolution, and which are not. Forecasters then give precise probabilities that measure their uncertainty about the outcome.

Second, Metaculus aggregates the forecasts into a simple median (community) prediction, and an advanced Metaculus Prediction. The Community Prediction is simple to calculate: it finds the value for which half of predictors predict a higher value, and half predict lower. Surprisingly, the Community Prediction is often better than any individual predictor! This principle is known as the wisdom of the crowd, and has been demonstrated on Metaculus and by other researchers. Intuitively it makes sense, as each individual has separate information and biases which in general balance each other out (provided the whole group is not biased in the same way).

Third, we measure the relative skill of each forecaster, using their quantified forecasts. When we know the outcome of the question, the question is “resolved”, and forecasters receive their scores. By tracking these scores from many forecasts on different topics over a long period of time, they become an increasingly better metric of how good a given forecaster is. We use this data for our Metaculus Prediction, which gives greater weight to predictions by forecasters with better track records. These scores also provide aspiring forecasters with important feedback on how they did and where they can improve.
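As a rough illustration of the two aggregates described above, the Python sketch below computes a plain median and a weighted median. The weighted median is only a stand-in for the idea of giving more weight to better track records; the actual Metaculus Prediction algorithm is not described in this FAQ, and the function names, forecasts, and weights are hypothetical.

```python
from statistics import median


def community_prediction(forecasts):
    """Plain median: half of the forecasts lie above it and half below."""
    return median(forecasts)


def weighted_median(forecasts, weights):
    """Smallest forecast at which the running weight reaches half the total.

    Illustrative assumption only: this is NOT the real Metaculus Prediction,
    just one simple way to let some forecasters count for more than others.
    """
    pairs = sorted(zip(forecasts, weights))
    half = sum(weights) / 2
    running = 0.0
    for value, weight in pairs:
        running += weight
        if running >= half:
            return value
    raise ValueError("no forecasts given")


forecasts = [0.2, 0.6, 0.7]   # hypothetical probabilities from three forecasters
weights = [1, 1, 5]           # hypothetical track-record weights

plain = community_prediction(forecasts)
weighted = weighted_median(forecasts, weights)
```

With forecasts of 20%, 60%, and 70%, the plain median is 60%. If the third forecaster is given five times the weight of the others, the weighted median moves to 70%.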

When is forecasting valuable?

Forecasting is uniquely valuable primarily in complex, multi-variable problems or in situations where a lack of data makes it difficult to predict using explicit or exact models.

In these and other scenarios, aggregated predictions of strong forecasters offer one of the best ways of predicting future events. In fact, work by the political scientist Philip Tetlock demonstrated that aggregated predictions were able to outperform professional intelligence analysts with access to classified information when forecasting various geopolitical outcomes.

Why should I be a forecaster?

Research has shown that great forecasters come from various backgrounds—and oftentimes from fields that have nothing to do with predicting the future. Like many mental capabilities, prediction is a talent that persists over time and is a skill that can be developed. Steady quantitative feedback and regular practice can greatly improve a forecaster's accuracy.

Some events, such as eclipse timing and well-polled elections, can often be predicted with high resolution, e.g. 99.9% likely or 3% likely. Others, such as the flip of a coin or a close horse race, cannot be accurately predicted, but their odds still can be. Metaculus aims at both: to provide a central generation and aggregation point for predictions. With these in hand, we believe that individuals, groups, corporations, governments, and humanity as a whole will make better decisions.

As well as being worthwhile, Metaculus aims to be interesting and fun, while allowing participants to hone their prediction prowess and amass a track-record to prove it.

Who created Metaculus?

Metaculus originated with two research scientists, Anthony Aguirre and Greg Laughlin. Aguirre, a physicist, is a co-founder of The Foundational Questions Institute, which catalyzes breakthrough research in fundamental physics, and of The Future of Life Institute, which aims to increase the benefit and safety of disruptive technologies like AI. Laughlin, an astrophysicist, is an expert at predictions ranging from the millisecond timescales relevant to high-frequency trading to the ultra-long-term stability of the solar system.

What Are Metaculus Tournaments and Question Series?

Tournaments

Metaculus tournaments are organized around a central topic or theme. Tournaments are often collaborations between Metaculus and a nonprofit, government agency, or other organization seeking to use forecasting to support effective decision making. You can find current and archived tournaments on our Tournaments page.

Tournaments are the perfect place to prove your forecasting skills, while helping to improve our collective decision making ability. Cash prizes and Medals are awarded to the most accurate forecasters, and sometimes for other valuable contributions (like comments). Follow a Tournament (with the Follow button) to never miss new questions.

After at least one question has resolved, a Leaderboard will appear on the tournament page displaying current scores and rankings. A personal score board ("My Score") will also appear, detailing your performance for each question (see How are Tournaments Scored?).

At the end of a tournament, the prize pool is divided among forecasters according to their forecasting performance. The more you forecasted and the more accurate your forecasts were, the greater proportion of the prize pool you receive.

Can I donate my tournament winnings?

If you have outstanding tournament winnings, Metaculus is happy to facilitate donations to various non-profits, regranting organizations, and funds. You can find the list of organizations we facilitate payments to here.

Question Series

Like Tournaments, Question Series are organized around a central topic or theme. Unlike tournaments, they do not have a prize pool.

Question Series still show leaderboards, for interest and fun. However, they do **not** award medals.

You can find all Question Series in a special section of the Tournaments page.

Is Metaculus a prediction market?

Sort of. Like a prediction market, Metaculus aims to aggregate many people's information, expertise, and predictive power into high-quality forecasts. However, prediction markets generally operate using real or virtual currency, which is used to buy and sell shares in "event occurrence." The idea is that people buy (or sell) shares if they think that the standing prices reflect too low (or high) a probability in that event. Metaculus, in contrast, directly solicits predicted probabilities from its users, then aggregates those probabilities. We believe that this sort of "prediction aggregator" has both advantages and disadvantages relative to a prediction market.

Advantages of Metaculus over prediction markets

Metaculus has several advantages over prediction markets. One is that Metaculus forecasts are scored solely based on accuracy, while prediction markets may be used for other reasons, such as hedging. This means that sometimes prediction markets may be distorted from the true probability by bettors who wish to mitigate their downside risk if an event occurs.

Are Metaculus Questions Polls?

No. Opinion polling can be a useful way to gauge the sentiment and changes in a group or culture, but there is often no single "right answer", as in a Gallup poll "How worried are you about the environment?"

In contrast, Metaculus questions are designed to be objectively resolvable (like in Will Brent Crude Oil top $140/barrel before May 2022?), and forecasters are not asked for their preferences, but for their predictions. Unlike in a poll, over many predictions, participants accrue a track record indicating their forecasting accuracy. These track records are incorporated into the Metaculus Prediction. The accuracy of the Metaculus track record itself is tracked here.

Metaculus Questions

What sorts of questions are allowed, and what makes a good question?

Questions should focus on tangible, objective facts about the world which are well-defined and not a matter of opinion. “When will the United States collapse?” is a poor, ambiguous question; “What will be the US' score in the Freedom in the World Report for 2050?” is more clear and definite. They generally take the form “Will (event) X happen by (date) Y?” or “When will (event) X occur?” or “What will the value or quantity of X be by (date) Y?”

A good question will be unambiguously resolvable. A community reading the question terms should be able to agree, before and after the event has occurred, whether the outcome satisfies the question’s terms.

Questions should also follow some obvious rules:

- Questions should respect privacy and not address the personal lives of non-public figures.

- Questions should not be directly or potentially defamatory, or generally in bad taste.

- Questions should never aim to predict mortality of individual people or even small groups. In cases of public interest (such as court appointees and political figures), the question should be phrased in other more directly relevant terms such as "when will X no longer serve on the court" or "will Y be unable to run for office on date X". When the topic is death (or longevity) itself, questions should treat people in aggregate or hypothetically.

- More generally, questions should avoid being written in a way that incentivizes illegal or harmful acts — that is, hypothetically, if someone were motivated enough by a Metaculus Question to influence the real world and change the outcome of a question's resolution, those actions should not be inherently illegal or harmful.

Who creates the questions, and who decides which get posted?

Many questions are launched by Metaculus staff, but any logged-in user can propose a question. Proposed questions will be reviewed by a group of moderators appointed by Metaculus. Moderators will select the best questions submitted, and help to edit the question to be clear, well-sourced, and aligned with our writing style.

Metaculus hosts questions on many topics, but our primary focus areas are Science, Technology, Effective Altruism, Artificial Intelligence, Health, and Geopolitics.

Who can edit questions?

- Admins can edit all questions at any time (however, once predictions have begun, great care is taken not to change a question's resolution terms unless necessary).

- Moderators can edit questions when they are Pending and Upcoming (before predictions have begun).

- Authors can edit their questions when they are Drafts and Pending.

- Authors can invite other users to edit questions that are in Draft or Pending.

How do I invite co-authors to my question?

- When a question is a Draft or Pending review, click the 'Invite Co-authors' button at the top of the page.

- Co-authors can edit the question, but cannot invite other co-authors or submit a draft for review.

- Note that if two writers are in the question editor at the same time, it's possible for one to overwrite the other's work. The last edit that was submitted will be saved.

- To leave a question you have been invited to co-author, click the "Remove myself as Co-author" button.

How can I get my own question posted?

- If you have a basic idea for a question but don’t have time/energy to work out the details, you’re welcome to submit it, discuss it in our question idea thread, or on our Discord channel.

- If you have a pretty fully-formed question, with at least a couple of linked references and fairly careful unambiguous resolution criteria, it’s likely that your question will be reviewed and launched quickly.

- Metaculus hosts questions on many topics, but our primary focus areas are Science, Technology, Effective Altruism, Artificial Intelligence, Health, and Geopolitics. Questions on other topics, especially those that require a lot of moderator effort to get launched, will be given lower priority and may be deferred until a later time.

- We regard submitted questions as suggestions and take a free hand in editing them. If you’re worried about having your name on a question that is altered from what you submit, or would like to see the question before it’s launched, please note this in the question itself; questions are hidden from public view until they are given “upcoming” status, and can be posted anonymously upon request.

What can I do if a question I submitted has been pending for a long time?

We currently receive a large volume of question submissions, many of which are interesting and well-written. That said, we try to approve just enough questions that they each can get the attention they deserve from our forecasters. Metaculus prioritizes questions on Science, Technology, Effective Altruism, Artificial Intelligence, Health, and Geopolitics. If your question falls into one of these categories, or is otherwise very urgent or important, you can tag us with @moderators to get our attention.

What can I do if a question should be resolved but isn't?

If a question is still waiting for resolution, check to make sure there hasn’t been a comment from staff explaining the reason for the delay. If there hasn’t, you can tag @admins to alert the Metaculus team. Please do not use the @admins tag more than once per week regarding a single question or resolution.

What is a private question?

Private questions are questions that are not visible to the broader community. They aren't subject to the normal review process, so you can create one and predict on it right away. You can resolve your own private questions at any time, but points for private predictions won't be added to your overall Metaculus score and they won't affect your ranking on the leaderboard.

You can use private questions for anything you want. Use them as practice to calibrate your predictions before playing for points, create a question series on a niche topic, or pose personal questions that only you can resolve. You can even invite up to 19 other users to view and predict on your own questions!

To invite other forecasters to your private question, click the '...' more options menu and select 'Share Private Question'.

What are the rules and guidelines for comments and discussions?

We have a full set of community etiquette guidelines but in summary:

- Users are welcome to comment on any question.

- Comments and questions can use markdown formatting.

- Metaculus aims at a high level of discourse. Comments should be on topic, relevant and interesting. Comments should not only state the author’s opinion (with the exception of quantified predictions). Comments which are spammy, aggressive, profane, offensive, derogatory, or harassing are not tolerated, as well as those that are explicitly commercial advertising or those that are in some way unlawful. See the Metaculus terms of use for more details.

- You can ping other users using "@username", which will send that user a notification (if they set that option in their notification settings).

- You are invited to upvote comments which contain relevant information to the question, and you can report comments that fail to uphold our etiquette guidelines.

- If a comment is spam, inappropriate/offensive, or flagrantly breaks our rules, please send us a report (under the "..." menu).

What do "credible source" and "before [date X]" and such phrases mean exactly?

To reduce ambiguity in an efficient way, here are some definitions that can be used in questions, with a meaning set by this FAQ:

- A "credible source" will be taken to be an online or in-print published story from a journalistic source, or information publicly posted on the website of an organization by that organization making public information pertaining to that organization, or in another source where the preponderance of evidence suggests that the information is correct and that there is no significant controversy surrounding the information or its correctness. It will generally not include unsourced information found in blogs, Facebook or Twitter postings, or websites of individuals.

- The phrase "Before [date X]" will be taken to mean prior to the first moment at which [date X] would apply, in UTC. For example, "Before 2010" will be taken to mean prior to midnight January 1, 2010; "Before June 30" would mean prior to midnight (00:00:00) UTC June 30.

- Note: Previously this section used "by [date x]" instead of "before [date x]", however "before" is much clearer and should always be used instead of "by", where feasible.
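The "before [date X]" definition above can be expressed as a strict comparison against the first UTC moment of the named date. The following is a minimal Python sketch of that reading; the function names and example timestamps are hypothetical and chosen only for illustration.

```python
from datetime import datetime, timedelta, timezone


def cutoff_utc(year, month=1, day=1):
    """First moment (00:00:00 UTC) at which the named date applies."""
    return datetime(year, month, day, tzinfo=timezone.utc)


def happened_before(event_time, cutoff):
    """True only if the timezone-aware event_time is strictly earlier than the cutoff."""
    return event_time < cutoff


before_2010 = cutoff_utc(2010)

# One second before midnight UTC still counts as "before 2010".
last_second_of_2009 = datetime(2009, 12, 31, 23, 59, 59, tzinfo=timezone.utc)

# 8 pm on December 31, 2009 in a UTC-5 zone is already 01:00 UTC on January 1, 2010.
new_years_eve_utc_minus_5 = datetime(
    2009, 12, 31, 20, 0, tzinfo=timezone(timedelta(hours=-5))
)
```

Because the comparison is strict and made in UTC, an event at exactly midnight UTC on January 1, 2010 does not count as "before 2010", and neither does an event that is still on December 31 in a local timezone but already past midnight in UTC.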

What types of questions are there?

Binary Questions

Binary questions can resolve as either Yes or No (unless the resolution criteria were underspecified or otherwise circumvented, in which case they can resolve as Ambiguous). Binary questions are appropriate when an event can either occur or not occur. For example, the question "Will the US unemployment rate stay above 5% through November 2021?" resolved as No because the unemployment rate dropped below 5% before the specified time.

Range Questions

Range questions resolve to a certain value, and forecasters can specify a probability distribution to estimate the likelihood of each value occurring. Range questions can have open or closed bounds. If the bounds are closed, probability can only be assigned to values that fall within the bounds. If one or more of the bounds are open, forecasters may assign probability outside the boundary, and the question may resolve as outside the boundary. See here for more details about boundaries on range questions.

The range interface allows you to input multiple probability distributions with different weights. See here for more details on using the interface.

There are two types of range questions, numeric range questions and date range questions.

Numeric Range

Numeric range questions can resolve as a numeric value. For example, the question "What will be the 4-week average of initial jobless claims (in thousands) filed in July 2021?" resolved as 395, because the underlying source reported 395 thousand initial jobless claims for July 2021.

Questions can also resolve outside the numeric range. For example, the question "What will the highest level of annualised core US CPI growth be, in 2021, according to U.S. Bureau of Labor Statistics data?" resolved as > 6.5 because the underlying source reported more than 6.5% annualized core CPI growth in the US, and 6.5 was the upper bound.

Date Range

Date range questions can resolve as a certain date. For example, the question "When will the next Public Health Emergency of International Concern be declared by the WHO?" resolved as July 23, 2022, because a Public Health Emergency of International Concern was declared on that date.

Questions can also resolve outside the date range. For example, the question "When will a SpaceX Super Heavy Booster fly?" resolved as > March 29, 2022 because a SpaceX Super Heavy booster was not launched before March 29, 2022, which was the upper bound.
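To make the open and closed bound behavior concrete, here is a small Python sketch of how a numeric outcome could be mapped to a resolution label. The function name, the example bounds, and the out-of-range value are hypothetical. This FAQ does not say what happens when an outcome falls beyond a closed bound, so the sketch simply raises an error in that case as an assumption.

```python
def resolve_numeric(value, lower, upper, open_lower=False, open_upper=False):
    """Return a resolution label for a numeric range question.

    Assumption: an outcome beyond a CLOSED bound is treated as an error here,
    because the FAQ does not describe that case.
    """
    if value < lower:
        if open_lower:
            return f"< {lower}"
        raise ValueError("outcome is below a closed lower bound")
    if value > upper:
        if open_upper:
            return f"> {upper}"
        raise ValueError("outcome is above a closed upper bound")
    return str(value)


# In-range outcome (the bounds 0 and 1000 are hypothetical).
in_range = resolve_numeric(395, lower=0, upper=1000)

# Outcome above an open upper bound of 6.5 (the value 7.0 is hypothetical).
above_open_bound = resolve_numeric(7.0, lower=0, upper=6.5, open_upper=True)
```

The same idea carries over to date range questions, with dates in place of numbers.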

What are question groups?

Question groups are sets of closely related questions or question outcomes all collected on a single page. Forecasters can predict quickly and efficiently on these interconnected outcomes, confident that they are keeping all of their predictions internally consistent.

How do question groups facilitate more efficient, more accurate forecasting?

With question groups, it's easy to forecast progressively wider distributions the further into the future you predict to reflect increasing uncertainty. A question group collecting multiple binary questions on a limited set of outcomes or on mutually exclusive outcomes makes it easier to see which forecasts are in tension with each other.

What happens to the existing question pages when they are combined in a question group?

When regular forecast questions are converted into "subquestions" of a question group, the original pages are replaced by a single question group page. Comments that previously lived on the individual question pages are moved to the comment section of the newly created group page with a note indicating the move.

Do I need to forecast on every outcome / subquestion of a question group?

No. Question groups comprise multiple independent subquestions. For that reason, there is no requirement that you forecast on every outcome within a group.

How are question groups scored?

Each outcome or subquestion is scored in the same manner as a normal independent question.

Why don't question group outcome probabilities sum to 100%?

Even if there can only be one outcome for a particular question group, the Community and Metaculus Predictions function as they would for normal independent questions. The Community and Metaculus Predictions will still display a median or a weighted aggregate of the forecasts on each subquestion, respectively. These medians and weighted aggregates are not constrained to sum to 100%.
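A small numeric example shows why per-subquestion medians need not sum to 100%. The Python sketch below uses made-up forecasts from three hypothetical forecasters on three mutually exclusive outcomes; each forecaster's own probabilities sum to 100%, but the medians taken outcome by outcome do not.

```python
from statistics import median

# Hypothetical forecasts; each row sums to 100% across the three outcomes.
forecasts = {
    "forecaster_1": [0.6, 0.3, 0.1],
    "forecaster_2": [0.2, 0.5, 0.3],
    "forecaster_3": [0.1, 0.2, 0.7],
}


def per_outcome_medians(forecasts):
    """Median for each outcome, computed independently of the other outcomes."""
    columns = zip(*forecasts.values())
    return [median(column) for column in columns]


medians = per_outcome_medians(forecasts)
total = sum(medians)
```

Here the medians are 20%, 30%, and 30%, which sum to 80% rather than 100%, because each subquestion is aggregated on its own.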

Feedback for question groups can be provided on the question group discussion post.

What are Conditional Pairs?

A Conditional Pair is a special type of Question Group that elicits conditional probabilities. Each Conditional Pair sits between a Parent Question and a Child Question. Both Parent and Child must be existing Metaculus Binary Questions.

Conditional Pairs ask two Conditional Questions (or "Conditionals" for short), each corresponding to a possible outcome of the Parent:

- If the Parent resolves Yes, how will the Child resolve?

- If the Parent resolves No, how will the Child resolve?

The first Conditional assumes that "The Parent resolves Yes" (or "if Yes" for short). The second conditional does the same for No.

Conditional probabilities are probabilities, so forecasting is very similar to Binary Questions. The main difference is that we present both conditionals next to each other for convenience.

Conditional questions are automatically resolved when their Parent and Child resolve:

- When the Parent resolves Yes, the "if No" Conditional is Annulled. (And vice versa.)

- When the Child resolves, the Conditional that was not annulled resolves to the same value.

Let’s work through an example:

- The Parent is "Will it rain today?".

- The Child is "Will it rain tomorrow?".

So the two Conditionals in the Conditional Pair will be:

- "If it rains today, will it rain tomorrow?"

- "If it does not rain today, will it rain tomorrow?"

For simplicity, Metaculus presents conditional questions graphically. In the forecasting interface they are shown in a table.

In the feeds, each possible outcome of the Parent is an arrow, and each conditional probability is a bar.

Back to the example:

It rains today. The Parent resolves Yes. This triggers the second Conditional ("if No") to be annulled. It is not scored.

You wait a day. This time it doesn't rain. The Child resolves No. This triggers the remaining Conditional ("if Yes") to resolve No. It is scored like a normal Binary Question.
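The resolution rules for a Conditional Pair can be summarized in a few lines of Python. This is a minimal sketch of the behavior described above, not Metaculus code: the function name and return format are hypothetical, and cases the FAQ does not cover (such as a Parent that resolves Ambiguous) are rejected.

```python
def resolve_conditional_pair(parent, child=None):
    """Return the state of both Conditionals once the Parent has resolved.

    parent: "Yes" or "No".
    child:  "Yes", "No", or None if the Child has not resolved yet.
    """
    if parent not in ("Yes", "No"):
        raise ValueError("this sketch only covers a Parent that resolved Yes or No")
    if child not in ("Yes", "No", None):
        raise ValueError("this sketch only covers a Child that resolved Yes or No")

    live = "if " + parent
    annulled = "if No" if parent == "Yes" else "if Yes"
    # The surviving Conditional takes the Child's value (None while still open).
    return {live: child, annulled: "Annulled"}


after_rain_today = resolve_conditional_pair("Yes")
after_dry_tomorrow = resolve_conditional_pair("Yes", "No")
```

In the rain example, `after_rain_today` has the "if No" Conditional annulled and the "if Yes" Conditional still open; once it fails to rain the next day, `after_dry_tomorrow` shows the "if Yes" Conditional resolving No.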

How do I create conditional pairs?

You can create and submit conditional pairs like any other question type. On the 'Create a Question' page, select Question Type 'conditional pair' and select Parent and Child questions.

Note: You can use question group subquestions as the Parent or Child by clicking the Parent or Child button and then either searching for the subquestion in the field or pasting the URL for the subquestion. To copy the URL for a subquestion, simply visit a question group page and click the '...' more options menu to reveal the Copy Link option.

How do I find certain questions on Metaculus?

Questions on Metaculus are sorted by activity by default. Newer questions, questions with new comments, recently upvoted questions, and questions with many new predictions will appear at the top of the Metaculus homepage. However, there are several additional ways to find questions of interest and customize the way you interact with Metaculus.

Search Bar

The search bar can be used to find questions using keywords and semantic matches. At this time it cannot search comments or users.

Filters

Questions can be sorted and filtered in a different manner from the default using the filters menu. Questions can be filtered by type, status and participation. Questions can also be ordered, for example by “Newest”. Note that the options available change when different filters are selected. For example, if you filter by “Closed” questions you will then be shown an option to order by “Soonest Resolving”.

Question Resolution

What are the "open date", "close date" and "resolve date"?

When submitting a question, you are asked to specify the closing date (when the question is no longer available for predicting) and resolution date (when the resolution is expected to occur). The date the question is set live for others to forecast on is known as the open date.

- The open date is the date/time when the question is open for predictions. Prior to this time, if the question is active, it will have "upcoming" status, and is potentially subject to change based on feedback. After the open date, changing questions is highly discouraged (as it could change details which are relevant to forecasts that have already been submitted) and such changes are typically noted in the question body and in the comments on the question.

- The close date is the date/time after which predictions can no longer be updated.

- The resolution date is the date when the event being predicted is expected to have definitively occurred (or not). This date lets Metaculus Admins know when the question might be ready for resolution. However, this is often just a guess, and is not binding in any way.

In some cases, questions must resolve at the resolution date according to the best available information. In such cases, it becomes important to choose the resolution date carefully. Try to set resolution dates that make for interesting and insightful questions! The date or time period the question is asking about must always be explicitly mentioned in the text (for example, “this question resolves as the value of X on January 1, 2040, according to source Y” or “this question resolves as Yes if X happens before January 1, 2040”).

The close date must be at least one hour prior to the resolution date, but can be much earlier, depending upon the context. Here are some guidelines for specifying the close date:

- If the outcome of the question will very likely or assuredly be determined at a fixed known time, then the closing time should be immediately before this time, and the resolution time just after that. (Example: a scheduled contest between competitors or the release of scheduled data)

- If the outcome of a question will be determined by some process that will occur at an unknown time, but the outcome is likely to be independent of this time, then it should be specified that the question retroactively closes some appropriate time before the process begins. (Example: success of a rocket launch occurring at an unknown time)

- If the outcome of a question depends on a discrete event that may or may not happen, the close time should be specified as shortly before the resolve time. The resolve time is chosen based on author discretion of the period of interest.
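The one-hour minimum between the close date and the resolution date is easy to check mechanically. The Python sketch below is only an illustration of that single rule; the function name and example dates are hypothetical, and it does not encode the more contextual guidelines in the list above.

```python
from datetime import datetime, timedelta, timezone

MIN_GAP = timedelta(hours=1)  # close date must be at least one hour before resolution


def close_date_is_valid(close_date, resolve_date):
    """True if the close date is at least one hour prior to the resolution date."""
    return resolve_date - close_date >= MIN_GAP


# Hypothetical dates for a scheduled event.
resolve_date = datetime(2030, 1, 1, 12, 0, tzinfo=timezone.utc)
ok_close = datetime(2030, 1, 1, 11, 0, tzinfo=timezone.utc)
late_close = datetime(2030, 1, 1, 11, 30, tzinfo=timezone.utc)
```

A close date exactly one hour before resolution passes the check, while one only thirty minutes before does not.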

Note: Previous guidance suggested that a question should close between 1/2 and 2/3 of the way between the open time and resolution time. This was necessary due to the scoring system at the time, but has been replaced by the above guidelines due to an update to the scoring system.

What timezone is used for questions?

For dates and times written in the question, such as "will event X happen before January 1, 2030?", if the timezone is not specified, Coordinated Universal Time (UTC) will be used. Question authors are free to specify a different timezone in the resolution criteria, and any timezone specified in the text will be used.

For date range questions, the dates on the interface are in UTC. Typically the time of day makes little difference as one day is minuscule in comparison to the full range, but occasionally for shorter term questions the time of day might materially impact scores. If it is not clear what point in a specified period a date range question will be resolved as, it resolves as the midpoint of that period. For example, if a question says it will resolve as a certain day, but not what time of day, it will resolve as noon UTC on that day.
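The midpoint rule can be written as a one-line calculation. The Python sketch below is illustrative only: the function name is hypothetical and the example day is chosen arbitrarily. It shows that the midpoint of a single UTC day is noon UTC on that day.

```python
from datetime import datetime, timedelta, timezone


def period_midpoint(start, end):
    """Midpoint of the period running from start (inclusive) to end (exclusive)."""
    return start + (end - start) / 2


# A whole UTC day: from 00:00 on July 23, 2022 to 00:00 on July 24, 2022.
day_start = datetime(2022, 7, 23, tzinfo=timezone.utc)
day_end = day_start + timedelta(days=1)

midpoint = period_midpoint(day_start, day_end)
```

The same function applies to longer periods, such as a month or a quarter, when the resolution criteria name only the period and not a specific moment within it.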

Who decides the resolution to a question?

Only Metaculus Administrators can resolve questions. Binary questions can resolve Yes, No, Ambiguous, or Annulled. Range questions can resolve to a specific value, an out-of-bounds value, Ambiguous, or Annulled.

What are "Ambiguous" and "Annulled" resolutions?

Sometimes a question cannot be resolved because the state of the world, the truth of the matter, is too uncertain. In these cases, the question is resolved as Ambiguous.

Other times, the state of the world is clear, but a key assumption of the question was overturned. In these cases, the question is Annulled.

In the same way, when a Conditional turns out to be based on an outcome that did not occur, it is Annulled. For example, when a Conditional Pair's parent resolves Yes, the "if No" Conditional is Annulled.

When questions are Annulled or resolved as Ambiguous, they are no longer open for forecasting, and they are not scored.

If you'd like to read more about why Ambiguous and Annulled resolutions are necessary you can expand the section below.

Why was this question Annulled or resolved as Ambiguous?

An Ambiguous or Annulled resolution generally implies that there was some inherent ambiguity in the question, that real-world events subverted one of the assumptions of the question, or that there is not a clear consensus as to what in fact occurred. Metaculus strives for satisfying resolutions to all questions, and we know that Ambiguous and Annulled resolutions are disappointing and unsatisfying. However, when resolving questions we have to consider factors such as fairness to all participating forecasters and the underlying incentives toward accurate forecasting.

To avoid this unfairness and provide the most accurate information, we resolve all questions in accordance with the actual written text of the resolution criteria whenever possible. By adhering as closely as possible to a reasonable interpretation of what's written in the resolution criteria, we minimize the potential for forecasters to arrive at different interpretations of what the question is asking, which leads to fairer scoring and better forecasts. In cases where the outcome of a question does not clearly correspond to the direction or assumptions of the text of the resolution criteria, Ambiguous resolution or Annulling the question allows us to preserve fairness in scoring.

Types of Ambiguous or Annulled Resolutions

A question's resolution criteria can be thought of as akin to a legal contract. The resolution criteria create a shared understanding of what forecasters are aiming to predict, and define the method by which they agree to be scored for accuracy when choosing to participate. When two forecasters who have diligently read the resolution criteria of a question come away with significantly different perceptions about the meaning of that question, it creates unfairness for at least one of these forecasters. If both perceptions are reasonable interpretations of the text, then one of these forecasters will likely receive a poor score at resolution time through no fault of their own. Additionally, the information provided by the forecasts on the question will be poor due to the differing interpretations.

The following sections provide more detail about common reasons we resolve questions as Ambiguous or Annul them and some examples. Some of these examples could fit into multiple categories, but we've listed them each in one main category as illustrative examples. This list of types of Ambiguous or Annulled resolutions is not exhaustive — there are other reasons that a question may resolve Ambiguous or be Annulled — but these cover some of the more common and some of the trickier scenarios. Here's a condensed version, but read on for more details:

- Ambiguous resolution. Reserved for questions where reality is not clear.

- No clear consensus. There is not enough information available to arrive at an appropriate resolution.

- Annulment. Reserved for questions where the reality is clear but the question is not.

- Underspecified questions. The question did not clearly describe an appropriate method to resolve the question.

- Subverted assumptions. The question made assumptions about the present or future state of the world that were violated.

- Imbalanced outcomes and consistent incentives. The binary question did not adequately specify a means for either Yes or No resolution, leading to imbalanced outcomes and bad incentives.

Note: Previously Metaculus only had one resolution type — Ambiguous — for cases where a question could not otherwise be resolved. We've since separated these into two types — Ambiguous and Annulled — to provide clarity on the reason that a question could not otherwise be resolved. Annulling questions first became an option in April of 2023.

Ambiguous Resolution

Ambiguous resolution is reserved for questions where reality is not clear, either because reporting about an event is conflicted or unclear about what actually happened, or because available material is silent on the information being sought. We've described the types of questions where Ambiguous resolution is appropriate as those with No Clear Consensus.

No Clear Consensus

Questions can also resolve Ambiguous when there is not enough information available to arrive at an appropriate resolution. This can be because of conflicting or unclear media reports, or because a data source that was expected to provide resolution information is no longer available. The following are some examples where there was no clear consensus.

- Will Russian troops enter Kyiv, Ukraine before December 31, 2022?

- This question asked if at least 100 Russian troops would enter Kyiv before the end of 2022. It was clear that some Russian troops entered Kyiv, and even probable that there were more than 100 Russian troops in Kyiv. However, there was no clear evidence that could be used to resolve the question, so it was necessary to resolve as Ambiguous. In addition to the lack of a clear consensus, this question is also an example of imbalanced outcomes and the need to preserve incentives. As an Admin explains here, due to the uncertainty around events in February the question could not remain open to see if a qualifying event would happen before the end of 2022. This is because the ambiguity around the events in February would mean that the question could only resolve as Yes or Ambiguous, which creates an incentive to forecast confidently in an outcome of Yes.

- What will the average cost of a ransomware kit be in 2022?

- This question relied on data published in a report by Microsoft, however Microsoft's report for the year in question no longer contained the relevant data. It's Metaculus policy that by default if a resolution source is not available Metaculus may use a functionally equivalent source in its place unless otherwise specified in the resolution text, but for this question a search for alternate sources did not turn anything up, leading to Ambiguous resolution.

Annulment

Annulling a question is reserved for situations where reality is clear but the question is not. In other words, the question failed to adequately capture a method for clear resolution.

Note: Annulment was introduced in April of 2023, so while the following examples describe Annulment the questions in actuality were resolved as Ambiguous.

The Question Was Underspecified

Writing good forecasting questions is hard, and it only gets harder the farther the question looks into the future. To fully eliminate the potential for a question to be Annulled the resolution criteria must anticipate all the possible outcomes that could occur in the future; in other words, there must be clear direction for how the question resolves in every possible outcome. Most questions, even very well-crafted ones, can't consider every possible outcome. When an outcome occurs that does not correspond to the instructions provided in the resolution criteria of the question then that question may have to be Annulled. In some cases we may be able to find an interpretation that is clearly an appropriate fit for the resolution criteria, but this is not always possible.

Here are some examples of Annulment due to underspecified questions:

- What will Substack's Google Trends index be at end of 2022?

- This question did not clearly specify how Google trends would be used to arrive at the average index for December of 2022, because the index value depends on the date range specified in Google Trends. An Admin provided more details in this comment.

- When will a fusion reactor reach ignition?

- This question did not clearly define what was meant by “ignition”. As an Admin described in this comment, the definition of ignition may vary depending on the researchers using it and the fusion method, as well as the reference frame for what counts as an energy input and output.

- Will Russia order a general mobilization by January 1, 2023?

- This question asked about Russia ordering a general mobilization, but the difficult task of determining that a general mobilization was ordered was not adequately addressed in the resolution criteria. The text of the question asked about a “general mobilization”, but the definitions used in the resolution criteria differed from the common understanding of a “general mobilization” and didn’t adequately account for the actual partial mobilization that was eventually ordered, as explained by an Admin here.

The Assumptions of the Question Were Subverted

Questions often contain assumptions in their resolution criteria, many of which are unstated. For example, assuming that the underlying methodology of a data source will remain the same, assuming that an organization will provide information about an event, or assuming that an event would play out a certain way. The best practice is to specify what happens in the event certain assumptions are violated (including by specifying that the question will be Annulled in certain situations) but due to the difficulty in anticipating these outcomes this isn't always done.

Here are some examples of Annulment due to subverted assumptions:

- Will a technical problem be identified as the cause of the crash of China Eastern Airlines Flight 5735?

- This question relied on the conclusions of a future National Transportation Safety Board (NTSB) report. However, it was a Chinese incident, so it was unlikely that the NTSB would publish such a report. Additionally, the question did not specify a date by which the report had to be published, after which the question would have resolved No. Since this was not specified and the assumption of a future NTSB report was violated, the question was Annulled, as explained by an Admin here.

- What will the Federal Reserves' Industrial Production Index be for November 2021, for semiconductors, printed circuit boards and related products?

- This question did not provide a description of how it should resolve in the event the underlying source changed its methodology. It anticipated the possibility of the base period changing, however, the entire methodology used to construct the series changed before this question resolved, not just the base period. Because the unwritten assumption of a consistent methodology was violated, the question was Annulled.

- When will Russia's nuclear readiness scale return to Level 1?

- Media reporting about Russia's nuclear readiness level gave the impression that the level had been changed to level 2, leading to the creation of this question. However, a more thorough investigation found that Russia's nuclear readiness most likely did not change. This violated the assumption of the question leading to the question being Annulled, as explained by an Admin here.

- What will be the Biden Administration's social cost of 1 ton of CO2 in 2022?

- This question specified that it would resolve according to a report published by the US Interagency Working Group (IWG), however the IWG did not publish an estimate before the end of 2022. This question anticipated this outcome and appropriately specified that it should be Annulled if no report was published before the end of 2022, and the question was resolved accordingly.

Imbalanced Outcomes and Consistent Incentives

Sometimes questions imply imbalanced outcomes, for example where the burden of proof for an event to be considered to have occurred is high and tips the scales toward a binary question resolving No, or where the question would require a substantial amount of research to surface information showing that an event occurred, which also favors a resolution of No. In certain circumstances these kinds of questions are okay, so long as there is a clear mechanism for the question to resolve as Yes and to resolve as No. However, sometimes questions are formulated such that there's no clear mechanism for a question to resolve as No, leading to the only realistic outcomes being a resolution of Yes or Annulled. This creates a bias in the question and also produces bad incentives if the question isn't Annulled.

The case of imbalanced outcomes and consistent incentives is best explained with examples, such as the following:

- Will any prediction market cause users to lose at least $1M before 2023?

- This question asks whether certain incidents such as hacking, scams, or incorrect resolution lead to users losing $1 million or more from a prediction market. However, there's no clear mechanism specified to find information about this, as prediction markets aren't commonly the subject of media reports. Concretely proving that this did not occur would require extensive research. This creates an imbalance in the resolution criteria. The question would resolve as Yes if there was a clear report from credible sources that this occurred. However, to resolve as No it would require extensive research to confirm that it didn't occur and a knowledge of the happenings in prediction markets that most people do not possess. To resolve as No Metaculus would either have to do an absurd amount of research, or assume that a lack of prominent reports on the topic is sufficient to resolve as No. In this case the question had to be Annulled.

- Now consider if there had been a clear report that this had actually occurred. In a world where that happened the question could arguably have been resolved as Yes. However, savvy users who follow our methods on Metaculus might realize that when a mechanism for a No resolution is unclear, the question will either resolve as Yes or be Annulled. This creates bad incentives, as these savvy forecasters might begin to raise the likelihood of Yes resolution on future similar forecasts as they meta-predict how Metaculus handles these questions. For this reason, binary questions must have a clear mechanism for how they resolve as both Yes and No. If the mechanism is unclear, then it can create bad incentives. Any questions without a clear mechanism to resolve as both possible outcomes should be Annulled, even if a qualifying event occurs that would resolve the question as Yes.

- Will any remaining FTX depositor withdraw any amount of tradeable assets from FTX before 2023?

- This question asked if an FTX depositor would withdraw assets where the withdrawal was settled by FTX. Unfortunately this question required a knowledge of the details of FTX withdrawals that was unavailable to Admins, resulting in there being no real mechanism to resolve the question as No. This led to an imbalance in possible outcomes, where the question could only truly resolve as Yes or be Annulled. The imbalance necessitated that the question be resolved as Ambiguous to preserve consistent incentives for forecasting.

Do all questions get resolved?

Currently, all questions will be resolved.

When will a question be resolved?

Questions will be resolved when they have satisfied the criteria specified in the resolution section of the question (or conversely, when those criteria have conclusively failed to be met). Each question also has a “Resolution Date” listed in our system for purposes such as question sorting; however, this listed date is often nothing more than an approximation, and the actual date of resolution may not be known in advance.

For questions which ask when something will happen (such as "When will the first humans land successfully on Mars?"), forecasters are asked to predict the date/time when the criteria have been satisfied (though the question may be decided and points awarded at some later time, when the evidence is conclusive). Some questions predict general time intervals, such as “In which month will unemployment pass below 4%?”; when such a question has specified the date/time which will be used, those terms will be used. If these terms are not given, the default policy will be to resolve as the midpoint of that period (for example, if the January report is the first month of unemployment under 4%, the resolution date will default to January 15).

Is the background material used for question resolution?

No, only the resolution criteria are relevant for resolving a question; the background section is intended only to provide potentially useful information and context for forecasters. In a well-specified question the resolution criteria should stand on their own as a set of self-contained instructions for resolving the question. In rare cases or older questions on Metaculus the background material may be necessary to inform resolution, but the information in the resolution criteria supersedes conflicting information in the background material.

Still, we want the background material to be as helpful as possible and accurately capture the context and relevant information available at the time the question was written, so if you see errors or misleading information in the background of a question please let Admins know by tagging @admins in a comment!

What happens if the resolution criteria of a question is unclear or suboptimal?

We take care to launch questions that are as clearly specified as possible. Still, writing clear and objectively resolvable questions is challenging, and in some cases a question's resolution criteria may unintentionally allow for multiple different interpretations or may not accurately represent the question being asked. In deciding how to approach questions that have been launched with these deficiencies, Admins primarily consider fairness to forecasters. Issuing clarifications or edits for open questions can harm some forecasters' scores when the clarification significantly changes the meaning of the question. Based on an assessment of fairness and other factors, Admins may issue a clarification to an open question to better specify the meaning. This is typically most appropriate when a question has not been open for long (and therefore forecasts can be updated with negligible impact to scores), when a question contains inconsistent or conflicting criteria, or when the clarification adds specificity where there previously was none in a way that avoids substantial changes to the meaning.

In many cases such questions must be resolved as Ambiguous or Annulled to preserve fairness in scoring. If you believe there are ambiguities or conflicts in the resolution criteria for a question, please let Admins know by tagging @admins in a comment. We hope inconsistencies can be identified as early as possible in the lifetime of a question so that they can be addressed. Claims of unclear resolution criteria made for questions which have already closed, or claims of incorrect resolution for questions which have already resolved, will be held to a higher standard of evidence if the issue with the resolution criteria was not previously mentioned while the question was open to forecasting.

Can questions be re-resolved?

Yes, at times questions are resolved and it is later discovered these resolutions were in error given the information available at the time. Some questions may even specify that they will be resolved according to initial reporting or results, but specify re-resolution in the event that the final results disagree with the initial results (for example, questions about elections might use this mechanism to allow prompt feedback for forecasters but arrive at the correct answer in the rare event that the initial election call was wrong). Questions may be re-resolved in such cases if Metaculus determines that re-resolution is appropriate.

What happens if a question gets resolved in the real world prior to the close time?

When resolving a question, the Moderator has an option to change the effective closing time of a question, so that if the question is unambiguously resolved prior to the closing time, the closing time can be changed to a time prior to which the resolution is uncertain.

When a question closes early, the points awarded are only those accumulated up until the (new) closing time. This is necessary in order to keep scoring "proper" (i.e. maximally reward predicting the right probability) and prevent gaming of points, but it does mean that the overall points (positive or negative) may end up being less than expected.

When should a question specify retroactive closure?

In some cases when the timing of an event is unknown it may be appropriate to change the closing date to a time before the question resolved, after the resolution is known. This is known as retroactive closure. Retroactive closure is not allowed except in the case of an event where the timing of the event is unknown and the outcome of the event is independent of the timing of the event, as described in the question closing guidelines above. When the timing of the event impacts the outcome of the event retroactive closure would violate proper scoring. For scoring to be proper a question must only close retroactively when the outcome is independent of the timing of the event. Here are several examples:

- The date of a rocket launch can often vary based on launch windows and weather, and the success or failure of the launch is primarily independent of when the launch occurs. In this case retroactive closure is appropriate, as the timing of the launch is very unlikely to impact forecasts for the success of the launch.

- In some countries elections can be called earlier than scheduled (these are known as snap elections). The timing of snap elections is often up to the party in power, and elections are often scheduled at a time the incumbent party considers to be favorable to their prospects. In this case retroactive closure is not appropriate, as the timing of the election will impact forecasts for the outcome of the election, violating proper scoring.

- Previously some questions on Metaculus were approved with inappropriate retroactive closure clauses. For example, the question "When will the number of functional artificial satellites in orbit exceed 5,000?" specifies retroactive closure to the date when the 5,001st satellite is launched. In this case retroactive closure was not appropriate, because the resolution of the question was dependent on the closure date since both relied on the number of satellites launched.

Forecasters often like retroactive closure because it prevents points from being truncated when an event occurs before the originally scheduled close date. But in order to elicit the best forecasts it’s important to follow proper scoring rules. For more on point truncation, see this section of the FAQ.

While Metaculus will try not to approve questions which specify inappropriate retroactive closure, sometimes new or existing questions do specify it. It is the policy of Metaculus to ignore inappropriate retroactive closure when resolving questions.

What happens if a question's resolution criteria turn out to have been fulfilled prior to the opening time?

Our Moderators and question authors strive to be as clear and informed as possible on each question, but mistakes occasionally happen, and such cases will be decided by our Admins' best judgement. For a hypothetical question like "Will a nuclear detonation occur in a Japanese City by 2030?" it can be understood by common sense that we are asking about the next detonation after the detonations in 1945. In other questions like "Will Facebook implement a feature to explain news feed recommendations before 2026?", we are asking about the first occurrence of this event. Since this event occurred before the question opened and this was not known to the question author, the question resolved as Ambiguous.

What happens if a resolution source is no longer available?

There are times when the intent of a question is to specifically track the actions or statements of specific organizations or people (such as, "how many Electoral Votes will the Democrat win in the 2020 US Presidential Election according to the Electoral College"); at other times, we are interested only in the actual truth, and we accept a resolution source as being an acceptable approximation (such as, "how many COVID-19 deaths will there be in the US in 2021 according to the CDC?"). That said, in many cases it is not clear which is intended.

Ideally, every question would be written with maximally clear language, but some ambiguities are inevitable. Unless specifically indicated otherwise, if a resolution source is judged by Metaculus Admins to be defunct, obsolete, or inadequate, Admins will make a best effort to replace it with a functional equivalent. Questions can over-rule this policy with language such as "If [this source] is no longer available, the question will resolve Ambiguously" or "This question tracks publications by [this source], regardless of publications by other sources."

What are Resolution Councils?

Metaculus uses Resolution Councils to reduce the likelihood of ambiguous resolutions for important questions—those that we feel have the potential to be in the top 1% of all questions on the platform in terms of impact.

A Resolution Council is an individual or group that is assigned to resolve a question. Resolution Council questions resolve at the authority of the individual or individuals identified in the resolution criteria. These individuals will identify the resolution that best aligns with the question and its resolution criteria.

If a Resolution Council member is not available to resolve a question, Metaculus may choose a suitable replacement.

Predictions

Is there a tutorial or walkthrough?

Yes! Start the Metaculus forecasting tutorial here.

How do I make a prediction? Can I change it later?

You make a prediction simply by sliding the slider on the question's page to the probability you believe most captures the likelihood that the event will occur.

You can revise your prediction at any time up until the question closes, and you are encouraged to do so: as new information comes to light, it is beneficial to take it into account.

You're also encouraged to predict early, however, and you are awarded bonus points for being among the earliest predictors.

How do I use the range interface?

Some Metaculus questions allow numeric or date range inputs, where you specify the distribution of probability you think is likely over a possible range of outcomes. This probability distribution is known as a probability density function and is the probability per unit of length. The probability density function can be used to determine the probability of a value falling within a range of values.

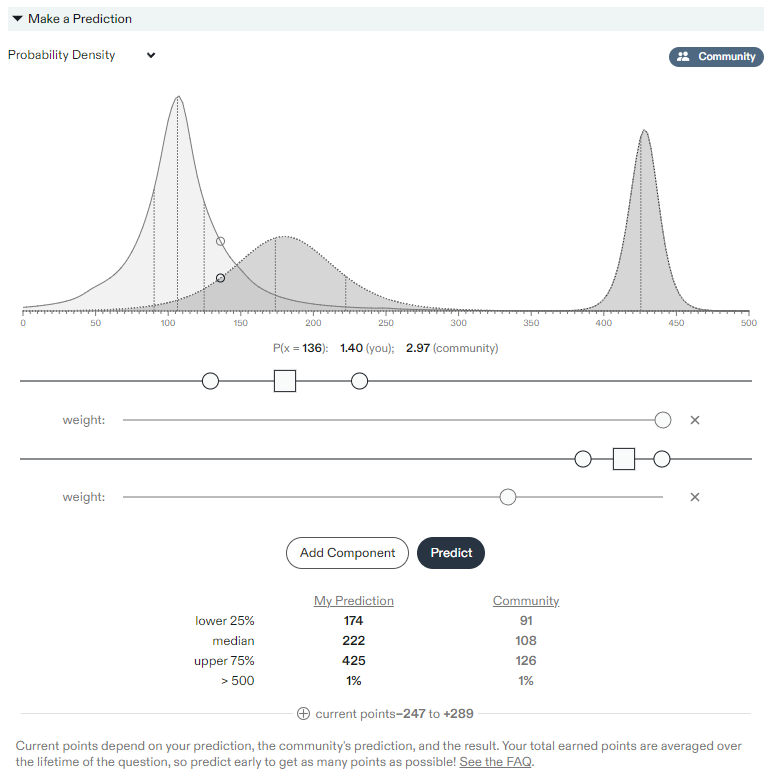

When you hover over the chart you see the probabilities at each point at the bottom of the chart. For example, in the image below you can see the probability density at the value 136, denoted by “P(x = 136)”, and you can see the probability density that you and the community have assigned to that point (in the image the user has assigned a probability density of 1.40 to that value and the community has assigned a probability density of 2.97).

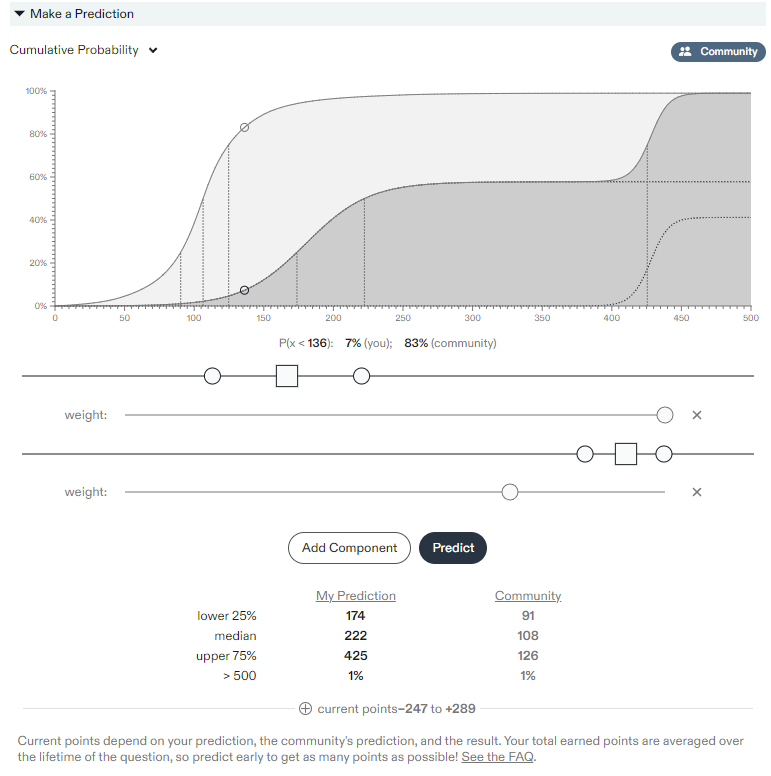

By selecting the “Probability Density” dropdown at the top of the chart you can change the display to “Cumulative Probability”. This display shows the cumulative distribution function, or in other words for any point it shows you the probability that you and the community have assigned to the question resolving below the indicated value. For example, in the image below you can see the probability that you and the community have assigned to the question resolving below the value of 136, denoted by “P(x < 136)”. The probability that the user has assigned is 7% to the question resolving below that value, while the community has assigned an 83% chance to the question resolving below that value.

The vertical lines shown on the graphs indicate the 25th percentile, median, and 75th percentile forecasts, respectively, of the user and the community. These values are also shown for the user and the community in the table at the bottom.

Out of Bounds Resolution

In the table showing the predictions at the bottom of the images above, you will see that in addition to the 25th percentile, median, and 75th percentile probabilities there is also one labeled "> 500". This question has an open upper bound, which means forecasters can assign a probability that the question will resolve as a value above the upper end of the specified range. For the question depicted above the community and the forecaster each assign a 1% probability to the question resolving above the upper boundary.

Questions can have open or closed boundaries on either end of the specified range.

Closed Boundaries

A closed boundary means forecasters are restricted from assigning a probability beyond the specified range. Closed boundaries are appropriate when a question cannot resolve outside the range. For example, a question asking what share of the vote a candidate will get with a range from 0 to 100 should have closed boundaries because it is not possible for the question to resolve outside the range.

Open Boundaries

An open boundary allows a question to resolve outside the range. For example, a question asking what share of the vote a candidate will get with a range from 30 to 70 should have open boundaries because it is possible that the candidate could get less than 30% of the vote or more than 70%. Open boundaries should be specified even if it is unlikely that the vote share falls outside the range, because it is theoretically possible that vote shares outside the specified range can occur.

Forecasters can assign probabilities outside the range when the boundary is open by moving the slider all the way to one side. The weight can also be lowered or increased to adjust the probability assigned to an out of bounds resolution.

Multiple Components

In the images shown above you can see that the user has assigned two probability distributions. Up to five logistic distributions can be added using the “Add Component” button. The relative weight of each can be adjusted using the “weight” slider below each component.
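As a rough illustration of how weighted components combine, the sketch below builds a mixture of logistic distributions and reads off cumulative probabilities from it. It is a simplified model under stated assumptions, not the Metaculus implementation: it ignores how the interface handles open and closed boundaries, and the function names and the example locations, scales, and weights are all hypothetical.

```python
import math


def logistic_cdf(x, loc, scale):
    """Cumulative probability of a logistic distribution at x."""
    return 1.0 / (1.0 + math.exp(-(x - loc) / scale))


def mixture_cdf(x, components):
    """Cumulative probability of a weighted mixture of logistic components.

    components is a list of (weight, loc, scale) tuples; the weights are
    normalized here, so only their relative sizes matter.
    """
    total_weight = sum(weight for weight, _, _ in components)
    weighted = sum(weight * logistic_cdf(x, loc, scale)
                   for weight, loc, scale in components)
    return weighted / total_weight


# Two hypothetical components: one centered at 100, a second with half
# the weight centered at 200.
components = [(1.0, 100.0, 10.0), (0.5, 200.0, 20.0)]

# Probability assigned to resolving below 136, i.e. P(x < 136).
p_below_136 = mixture_cdf(136.0, components)

# Probability of resolving between 100 and 200 is a difference of two CDF values.
p_100_to_200 = mixture_cdf(200.0, components) - mixture_cdf(100.0, components)

print(round(p_below_136, 3), round(p_100_to_200, 3))
```

Changing a component's weight shifts how much of the total probability that component contributes, which is the effect the “weight” slider has in this simplified picture.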

Asymmetric Predictions

The probability distributions can be entered asymmetrically by dragging one side of the distribution slider. On desktop computers users can hold the shift key while dragging a slider to force the distribution to be symmetric.

How is the Community Prediction calculated?

The Community Prediction is a consensus of recent forecaster predictions. It's designed to respond to big changes in forecaster opinion while still being fairly insensitive to outliers.

Here's the mathematical detail:

- Keep only the most recent prediction from each forecaster.

- Assign them a number \(n\), from oldest to newest (oldest is \(1\)).

- Weight each by \(w(n) \propto e^{\sqrt{n}}\) before being aggregated.

- For Binary Questions, the Community Prediction is a weighted median of the individual forecaster probabilities.

- For Numeric and Date Questions, the Community Prediction is a weighted mixture (i.e. a weighted sum) of the individual forecaster distributions.

- The particular form of the weights means that approximately \(\sqrt{N}\) forecasters need to predict or update their prediction in order to substantially change the Community Prediction on a question that already has \(N\) forecasters.
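
The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the production implementation: the function names are hypothetical, the weighted median uses one common convention (the smallest value at which cumulative weight reaches half the total), and continuous forecasts are assumed to be densities sampled on a shared grid.

```python
import math

def recency_weights(count):
    """Unnormalized weights w(n) = exp(sqrt(n)) for n = 1..count (1 is oldest)."""
    return [math.exp(math.sqrt(n)) for n in range(1, count + 1)]

def weighted_median(values, weights):
    """Smallest value at which the cumulative weight reaches half the total."""
    pairs = sorted(zip(values, weights))
    half = sum(weights) / 2.0
    running = 0.0
    for value, weight in pairs:
        running += weight
        if running >= half:
            return value
    return pairs[-1][0]

def community_prediction_binary(latest_predictions):
    """latest_predictions: one probability per forecaster, oldest to newest."""
    weights = recency_weights(len(latest_predictions))
    return weighted_median(latest_predictions, weights)

def community_prediction_continuous(latest_densities):
    """Weighted mixture of per-forecaster densities on a shared grid."""
    weights = recency_weights(len(latest_densities))
    total = sum(weights)
    grid_size = len(latest_densities[0])
    return [
        sum(w * density[i] for w, density in zip(weights, latest_densities)) / total
        for i in range(grid_size)
    ]

# Four forecasters, oldest first. The two newest forecasts carry the most weight.
binary_cp = community_prediction_binary([0.2, 0.3, 0.9, 0.8])
```

With these four forecasts the weighted median is 0.8, whereas an unweighted median would fall between 0.3 and 0.8, which shows how the weights favor recent predictions.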

Users can hide the Community Prediction from view from within their settings.

What is the Metaculus Prediction?

The Metaculus Prediction is the Metaculus system's best estimate of how a question will resolve. It's based on predictions from community members, but unlike the Community Prediction, it's not a simple average or median. Instead, the Metaculus Prediction uses a sophisticated model to calibrate and weight each user, ideally resulting in a prediction that's better than the best of the community.

For questions that resolved in 2021, the Metaculus Prediction has a Brier score of 0.107, slightly better than the Community Prediction's Brier score of 0.108 (lower Brier scores indicate greater accuracy). You can see some of the fine details on our track record page.
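
For readers unfamiliar with the Brier score, here is a minimal sketch of the standard binary definition: the mean squared difference between the forecast probability and the outcome (1 if the event happened, 0 if not). The function name and sample numbers are illustrative, and the sketch does not reproduce how the track record page aggregates forecasts over time.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between forecast probabilities and 0/1 outcomes."""
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must be non-empty and equal in length")
    squared_errors = [(p - o) ** 2 for p, o in zip(forecasts, outcomes)]
    return sum(squared_errors) / len(squared_errors)

# Three resolved binary questions: (0.01 + 0.04 + 0.36) / 3
example = brier_score([0.9, 0.2, 0.6], [1, 0, 0])
```

A forecaster who always answers 50% scores 0.25 under this definition, so scores well below 0.25 indicate forecasts that carry real information.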

Why can't I see the CP or MP?

When a question first opens, nobody can see the Community Prediction for a while, to avoid giving inordinate weight to the very first predictions, which may "ground" or bias later ones. The Metaculus Prediction is hidden until the question closes.

What Are Public Figure Predictions?

Public Figure Prediction pages are dedicated to collecting and preserving important predictions made by prominent public figures and putting them into conversation with Metaculus community forecasts. Each figure’s page features a list of predictions they made along with the source that recorded the prediction, the date the prediction was made, and related Metaculus questions. Public predictions are transparently presented alongside community forecasts in a manner that is inspectable and understandable by all, providing public accountability and additional context for the linked Metaculus questions.

A Public Figure is someone with a certain social position within a particular sphere of influence, such as a politician, media personality, scientist, journalist, economist, academic, or business leader.

What qualifies as a prediction?

A prediction is a claim or a statement about what someone thinks will happen in the future, where the thing predicted has some amount of uncertainty associated with it.

A Public Figure Prediction is a prediction made by the public figure themselves and not by figures who might represent them, such as employees, campaign managers, or spokespeople.

Who can submit Public Figure Predictions?

When predictions are made by public figures such as elected politicians, public health officials, economists, journalists, and business leaders, they become candidates for inclusion in the Public Figure Prediction system.

How can I submit a Public Figure Prediction?

From a Public Figure's page, click Report Prediction and then provide:

- A direct quotation from the prediction news source

- The name of the news source

- A link to the news source

- The prediction date

- At least one related Metaculus question

If the Public Figure does not yet have a dedicated page, you can request that one be created by commenting on the Public Figures Predictions discussion post. Tag @christian for a faster moderation process.

What are the criteria for selecting linked Metaculus questions related to the Public Figure Prediction?

Depending on the level of specificity and clarity of the Public Figure Prediction, a linked Metaculus question might resolve according to the exact same criteria as the prediction. For example, Joe Biden expressed that he plans to run for reelection. This Metaculus question asks directly whether he will run.

Linked questions are not required, however, to directly correspond to the public figure’s prediction, and this question on whether Biden will be the Democratic nominee in 2024 is clearly relevant to the public figure’s claim, even though it is further from the claim than asking whether Biden will run. Relevant linked questions shed light on, create additional context for, or provide potential evidence for or against the public figure’s claim. Note that a question being closed or resolved does not disqualify it from being linked to the prediction.

On the other hand, this question about whether the IRS designates crypto miners as ‘brokers’ by 2025 follows from Biden’s Infrastructure Investment and Jobs Act, but beyond the Biden connection, it fails to satisfy the above criteria for a relevant linked question.

Which sources are acceptable?

News sources that have authority and are known to be accurate are acceptable. If a number of news sources report the same prediction, but the prediction originated from a single source, using the original source is preferred. Twitter accounts or personal blogs are acceptable if they are owned by the public figure themselves.

Who decides what happens next?

Moderators will review and approve your request or provide feedback.

What happens if a public figure updates their prediction?

On the page of the prediction, comment the update with the source and tag a moderator. The moderator will review and perform the update if necessary.

I am the Public Figure who made the prediction. How can I claim this page?

Please email us at support@metaculus.com.

What is "Reaffirming" a prediction?

Sometimes you haven't changed your mind on a question, but you still want to record your current forecast. This is called "reaffirming": predicting the same value you predicted before, now. It is also useful when sorting questions by the age of your latest forecast, since reaffirming a question sends it to the bottom of that list. You can reaffirm a question from the normal forecast interface on the question page, or using a special button in feeds.

On question groups, reaffirming impacts all subquestions on which you had a forecast, but not the others.

Scores and Medals

What are scores?

Scores measure forecasting performance over many predictions. Metaculus uses both Baseline scores, which compare you to an impartial baseline, and Peer scores, which compare you to all other forecasters. We also still use Relative scores for tournaments. We do not use the now-obsolete Metaculus points, though they are still computed and you can find them on the question page.

Learn more in the dedicated Scores FAQ.

What are medals?

Medals reward Metaculus users for excellence in forecasting accuracy, insightful comment writing, and engaging question writing. We give medals for placing well in any of the 4 leaderboards: Baseline Accuracy, Peer Accuracy, Comments, and Question Writing. Medals are awarded every year. Medals are also awarded for Tournament performance.

Learn more in the dedicated Medals FAQ.

Metaculus Journal

What is the Metaculus Journal?

The Metaculus Journal publishes longform, educational essays on critical topics like emerging science and technology, global health, biosecurity, economics and econometrics, environmental science, and geopolitics—all fortified with testable, quantified forecasts.

If you would like to write for the Metaculus Journal, email christian@metaculus.com with a resume or CV, a writing sample, and two story pitches.

What is a fortified essay?

In November 2021 Metaculus introduced a new project: Fortified Essays. A Fortified Essay is an essay that is “fortified” by its inclusion of quantified forecasts which are justified in the essay. The goal of Fortified Essays is to leverage and demonstrate the knowledge and intellectual labor that went into answering forecasting questions while also putting the forecasts into a larger context.

Metaculus plans to run Fortified Essay Contests regularly as part of some tournaments. The additional context that essays provide is necessary because a quantified forecast in isolation may not provide the information required to drive decision-making by stakeholders. In Fortified Essays, writers can explain the reasoning behind their predictions, discuss the factors driving the predicted outcomes, explore the implications of these outcomes, and offer their own recommendations. By placing forecasts into this larger context, these essays are better able to help stakeholders deeply understand the relevant forecasts and how much weight to place on them. The best essays will be shared with a vibrant and global effective altruism community of thousands of individuals and dozens of organizations.

Miscellany

What are Metaculus Pro Forecasters?

For certain projects Metaculus employs Pro Forecasters who have demonstrated excellent forecasting ability and who have a history of clearly describing their rationales. Pros forecast on private and public sets of questions to produce well-calibrated forecasts and descriptive rationales for our partners. We primarily recruit members of the Metaculus community with the best track records for our Pro team, but forecasters who have demonstrated excellent forecasting ability elsewhere may be considered as well.

If you’re interested in hiring Metaculus Pro Forecasters for a project, contact us at support@metaculus.com with the subject "Project Inquiry".

Metaculus selects individuals according to the following criteria:

- Have scores in the top 2% of all Metaculus forecasters.

- Have forecasted on at least 75 questions that have been resolved.

- Have experience forecasting for a year or more.

- Have forecasted across multiple subject areas.

- Have a history of providing commentary explaining their forecasts.

Does Metaculus have an API?

The Metaculus API can be found here: https://www.metaculus.com/api2/schema/redoc/

How do I change my username?

You can change your name for free within the first three days of registering. After that you'll be able to change it once every 180 days.

I’m registered. Why can’t I comment on a question?

In an effort to reduce spam, new users must wait 12 hours after signup before commenting is unlocked.

Understanding account suspensions.

Metaculus may, though this thankfully occurs very rarely, issue a temporary suspension of an account. This occurs when a user has acted in a way that we consider inappropriate, such as when our terms of use are violated. At this point, the user will receive a notice about the suspension and be made aware that continuing this behaviour is unacceptable. Temporary suspensions serve as a warning to users that they are a few infractions away from receiving a permanent ban on their account.

Why can I see the Community Prediction on some questions, the Metaculus Prediction on others, and no prediction on some others?

When a question first opens, nobody can see the Community Prediction for a while, to avoid giving inordinate weight to the very first predictions, which may "ground" or bias later ones. Once the Community Prediction is visible, the Metaculus Prediction is hidden until the question closes.

What is NewsMatch?

NewsMatch displays a selection of articles relevant to the current Metaculus question. These serve as an additional resource for forecasters as they discuss and predict on the question. Each article is listed with its source and its publication date. Clicking an article title navigates to the article itself. Up and downvoting allows you to indicate whether the article was helpful or not. Your input improves the accuracy and the usefulness of the model that matches articles to Metaculus questions.

The article matching model is supported by Improve the News, a news aggregator developed by a group of researchers at MIT. Designed to give readers more control over their news consumption, Improve the News helps readers stay informed while encountering a wider variety of viewpoints.

Articles in ITN's database are matched with relevant Metaculus questions by a transformer-based machine learning model trained to map semantically similar passages to regions in "embedding space." The embeddings themselves are generated using MPNet.

What are Community Insights?

Community Insights summarize Metaculus user comments on a given question using GPT-4. They condense recent predictions, timestamped comments, and the current community prediction into concise summaries of relevant arguments for different forecasts on a given question. Forecasters can use them to make more informed decisions and stay up-to-date with the latest insights from the community.

Community Insights are currently available on binary and continuous questions with large comment threads and will update regularly as new discussion emerges in the comments. If you have feedback on these summaries — or would like to see them appear on a wider variety of questions — email support@metaculus.com.

If you find a Community Insights summary to be incorrect, offensive, or misleading please use the button at the bottom of the summary to “Flag this summary” so the Metaculus team can address it.

Can I get my own Metaculus?

Yes! Metaculus has a domain system, where each domain (like "example.metaculus.com") has a subset of questions and users that are assigned to it. Each question has a set of domains it is posted on, and each user has a set of domains they are a member of. Thus a domain is a flexible way of setting a particular set of questions that are private to a set of users, while allowing some questions in the domain to be posted also to metaculus.com. Domains are a product that Metaculus can provide with various levels of support for a fee; please be in touch for more details.

How can I help spread the word about Metaculus?